AI in GxP Systems: A New Paradigm for CSV, Quality, and Data Integrity

Sarkar C. et al., International Journal of Molecular Sciences, 2023, 24(3), 2026. License: CC BY 4.0.

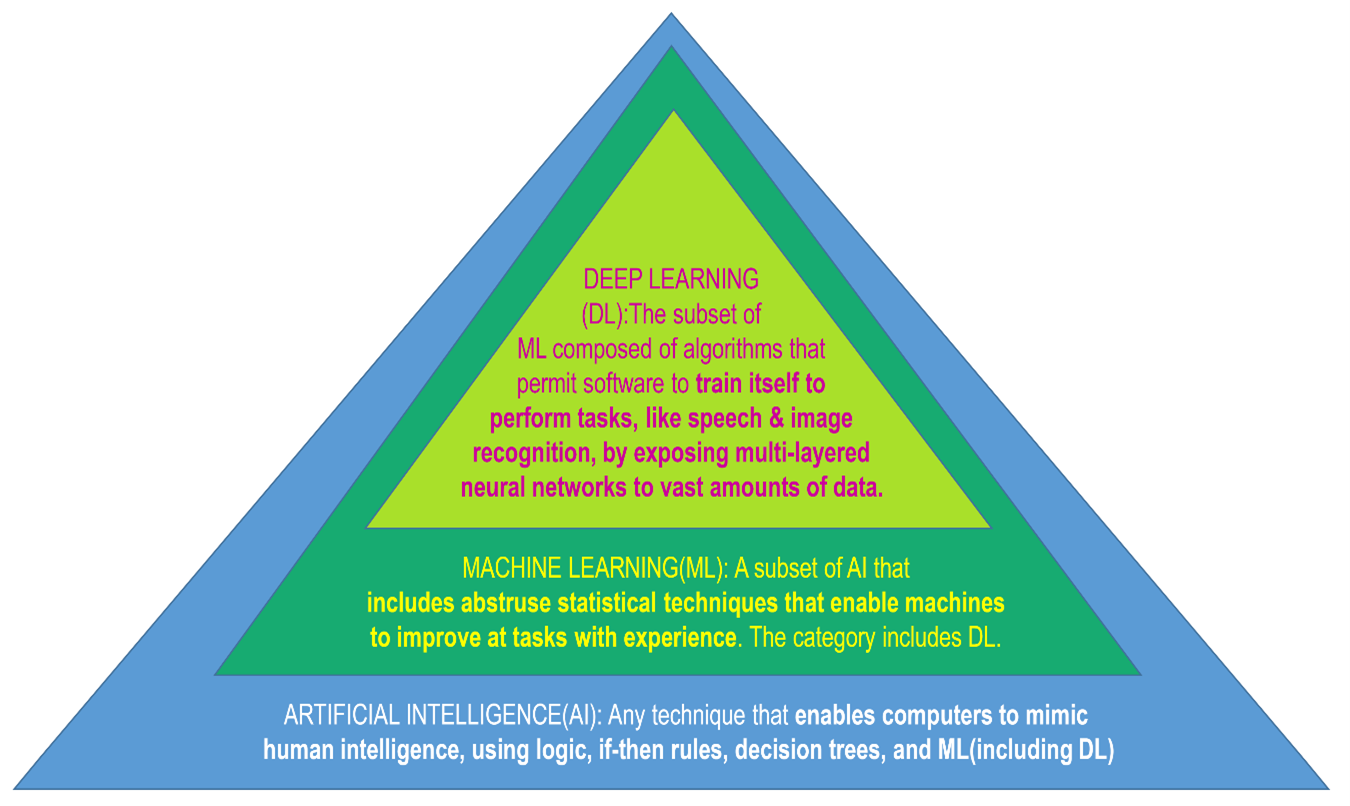

This shift in nature requires broadening the traditional validation approach. Guidelines published in recent years, namely GAMP 5 Second Edition and the 2025 GAMP AI Guide, clarify that the evaluation of an AI-based system must also cover the model, the training data, the training techniques, the hyperparameters, and the way model stability is monitored over time. The point is therefore not to add yet another document to the validation package, but to expand its scope to elements previously considered external to the concept of "software".
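One way to picture this expanded scope is as a single versioned record that ties a model to its training data, training technique, hyperparameters, and monitoring plan. The sketch below is purely illustrative: the `ModelBaseline` class, its field names, and all example values are assumptions made for this example, not something prescribed by GAMP 5 or the GAMP AI Guide.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ModelBaseline:
    """Hypothetical record of the items placed under validation scope."""
    model_name: str
    model_version: str
    training_data_sha256: str  # fingerprint of the training dataset manifest
    training_technique: str
    hyperparameters: dict
    monitoring_plan_id: str    # reference to the stability-monitoring procedure

    def fingerprint(self) -> str:
        # Serialize with sorted keys so identical content always yields the same hash.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


# Example values are invented for illustration only.
baseline = ModelBaseline(
    model_name="vial-inspection",
    model_version="1.0.0",
    training_data_sha256=hashlib.sha256(b"example manifest").hexdigest(),
    training_technique="supervised image classification",
    hyperparameters={"learning_rate": 0.001, "epochs": 50, "batch_size": 32},
    monitoring_plan_id="MON-001",
)
print(baseline.fingerprint())
```

Because the fingerprint covers every field, a change to the dataset, a hyperparameter, or the model version produces a different value, which makes an unrecorded change easier to detect.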

The underlying regulations remain unchanged. 21 CFR Part 11 and Annex 11 continue to require complete audit trails, access controls, data-security mechanisms, and record-integrity safeguards. However, AI introduces additional risks, such as dataset bias, performance degradation during real-world use, and excessive dependence on specific operating conditions. All of these require new controls.

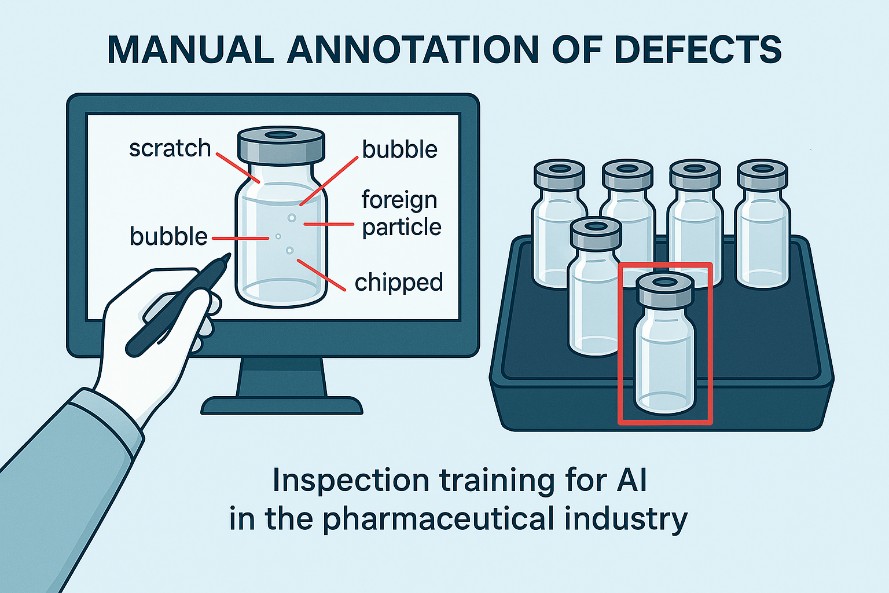

To better understand this transformation, a concrete example is helpful.

Consider a visual inspection system for vials or bottles. Before AI can be used in production, it must be trained on a large number of images.

These images are collected from a representative batch and analyzed one by one by trained operators. Each image must be manually labeled, identifying defects such as scratches, chips, bubbles, particles, micro-fractures, stopper anomalies, or incorrect fill levels. It is a slow and meticulous activity, but it is the most important phase of the entire process: this set of labels is the ground truth from which the model will learn to distinguish compliant products from non-compliant ones.
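As a minimal sketch of how such labels could be recorded in a traceable way, the example below stores, for each image, a content hash, the assigned class, the operator, and a timestamp, and then counts labels per class as a first check on dataset balance. The class list mirrors the defects named above; the `LabelRecord` structure, the function names, and the operator IDs are assumptions for illustration.

```python
import hashlib
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone

# Label vocabulary taken from the defect types listed in the text.
DEFECT_CLASSES = {
    "compliant", "scratch", "chip", "bubble", "particle",
    "micro_fracture", "stopper_anomaly", "fill_level",
}


@dataclass(frozen=True)
class LabelRecord:
    """Hypothetical record linking one image to one operator decision."""
    image_sha256: str  # identifies the exact image content that was labeled
    label: str
    operator_id: str
    labeled_at: str    # UTC timestamp in ISO 8601 format


def make_label(image_bytes: bytes, label: str, operator_id: str) -> LabelRecord:
    # Reject labels outside the agreed vocabulary instead of storing free text.
    if label not in DEFECT_CLASSES:
        raise ValueError(f"unknown label: {label!r}")
    return LabelRecord(
        image_sha256=hashlib.sha256(image_bytes).hexdigest(),
        label=label,
        operator_id=operator_id,
        labeled_at=datetime.now(timezone.utc).isoformat(),
    )


def class_distribution(records) -> Counter:
    # Number of labeled images per class.
    return Counter(r.label for r in records)


records = [
    make_label(b"image-1", "scratch", "op-01"),
    make_label(b"image-2", "compliant", "op-02"),
    make_label(b"image-3", "scratch", "op-01"),
]
print(class_distribution(records))
```

A class with very few examples in this count is an early hint that the dataset may not be representative for that defect type.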

During training, the neural network reprocesses the entire dataset multiple times. It compares its predictions with the labels provided by the operators and gradually adjusts its internal weights. Iteration after iteration, it reduces error and improves its ability to recognize defects. Training ends only when performance stabilizes. At that point, the model is frozen and can be deployed in production. However, even this is not enough.
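The stopping rule described above ("training ends only when performance stabilizes") is often implemented as early stopping on a validation metric. The sketch below assumes that interpretation; the text does not say which criterion the system actually uses. The function name, the `patience` and `min_delta` parameters, and the loss values are illustrative assumptions.

```python
def stopping_epoch(val_losses, patience=3, min_delta=1e-3):
    """Return the index of the epoch at which training would stop.

    Training is considered stable once the validation loss has failed to
    improve by more than `min_delta` for `patience` consecutive epochs.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    best = float("inf")
    stale = 0  # consecutive epochs without a meaningful improvement
    for epoch, loss in enumerate(val_losses):
        if best - loss > min_delta:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    # The loss never plateaued within the epochs provided.
    return len(val_losses) - 1


# Invented validation losses: fast improvement, then a plateau near 0.40.
losses = [0.90, 0.60, 0.45, 0.40, 0.3995, 0.3997, 0.3996, 0.3996]
print(stopping_epoch(losses))  # prints 6
```

In this example the last meaningful improvement occurs at epoch 3, and the next three epochs change the loss by less than `min_delta`, so training stops at epoch 6. The weights saved at that point would be the frozen version that moves on to validation.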

At the European level, the 2024 AI Act adds another layer of responsibility. The regulation introduces a risk-based classification and requires high-risk systems, a category into which many GxP applications fall, to comply with stricter rules on data governance, robustness, documentation, and human oversight. This approach integrates well with validation logic and pushes companies toward more mature processes.

For those working in CSV, this does not mean reinventing everything, but shifting the focus. The familiar activities remain, but they must be applied to a different object: no longer only deterministic software, but models that evolve over time, depend on data, and require continuous monitoring. As a result, it will be necessary to evaluate dataset representativeness, control data transformations, define objective acceptance criteria for models, manage behavior changes, and implement continuous performance monitoring. Data Integrity also expands: beyond classical principles, the traceability of the training dataset, the explainability of model decisions, and the verification that data are fit for purpose all become essential. These aspects require an organization with well-defined roles and responsibilities and a "2.0" data-driven culture.
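To make "objective acceptance criteria" and "continuous performance monitoring" concrete, the sketch below compares a model's decisions on a labeled sample against predefined limits. It assumes a binary accept/reject decision, and the two metrics and their threshold values are invented for illustration; real limits would come from the risk assessment. The same check could be re-run periodically on newly labeled production samples to detect performance degradation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical predefined limits; the values used below are illustrative."""
    min_sensitivity: float        # share of defective units correctly rejected
    max_false_reject_rate: float  # share of good units wrongly rejected


def evaluate(y_true, y_pred, criteria):
    """Compare predictions with reference labels (True means defective)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t and p for t, p in pairs)          # defective, rejected
    fn = sum(t and not p for t, p in pairs)      # defective, accepted
    fp = sum((not t) and p for t, p in pairs)    # good, rejected
    tn = sum((not t) and (not p) for t, p in pairs)  # good, accepted
    if tp + fn == 0 or fp + tn == 0:
        raise ValueError("sample must contain both defective and good units")
    sensitivity = tp / (tp + fn)
    false_reject_rate = fp / (fp + tn)
    return {
        "sensitivity": sensitivity,
        "false_reject_rate": false_reject_rate,
        "passed": (sensitivity >= criteria.min_sensitivity
                   and false_reject_rate <= criteria.max_false_reject_rate),
    }


# Invented sample: 10 defective and 10 good units.
y_true = [True] * 10 + [False] * 10
y_pred = [True] * 9 + [False] + [True] + [False] * 9  # one miss, one false reject
criteria = AcceptanceCriteria(min_sensitivity=0.95, max_false_reject_rate=0.15)
print(evaluate(y_true, y_pred, criteria))
```

Here the model catches 9 of 10 defective units, so the sensitivity of 0.9 falls below the 0.95 limit and the check fails even though the false reject rate is within bounds. Fixing the limits before testing is what makes the criterion objective.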

Article authored by Andrea Bussi - CSV Business Unit Manager, S.T.B. Valitech S.r.l.

- GAMP 5 - A Risk-Based Approach to Compliant GxP Computerized Systems (Second Edition). 2022.

- ISPE GAMP Guide: Artificial Intelligence. 2025.

- Sarkar C. et al. Artificial Intelligence and Machine Learning Technology Driven Modern Drug Discovery and Development. International Journal of Molecular Sciences, 2023, 24(3), 2026. [https://www.mdpi.com/1422-0067/24/3/2026] License: CC BY 4.0.

- European Medicines Agency (EMA). Guiding Principles of Good AI Practice in Drug Development. [https://www.ema.europa.eu/en/documents/other/guiding-principles-good-ai-practice-drug-development_en.pdf]

- European Commission. EudraLex Volume 4 - Annex 22: Artificial Intelligence (draft). [https://health.ec.europa.eu/document/download/5f38a92d-bb8e-4264-8898-ea076e926db6_en?filename=mp_vol4_chap4_annex22_consultation_guideline_en.pdf]

- U.S. Food and Drug Administration. 21 CFR Part 11 - Electronic Records; Electronic Signatures. [https://www.ecfr.gov/current/title-21/chapter-I/subchapter-A/part-11]

- U.S. Food and Drug Administration. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. 2021. [https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device]

- European Medicines Agency (EMA). Reflection Paper on the Use of Artificial Intelligence in the Medicinal Product Lifecycle. 2021. [https://www.ema.europa.eu/en/documents/scientific-guideline/reflection-paper-use-artificial-intelligence-medicinal-product-lifecycle_en.pdf]

- European Union. Artificial Intelligence Act [https://artificialintelligenceact.eu]

- Official Journal of the EU [https://eur-lex.europa.eu]

- Nagy, Zsolt. Artificial Intelligence and Machine Learning Fundamentals. Packt Publishing, 2018.

- Musiol, Martin. Generative AI: Navigating the Course to the Artificial General Intelligence Future. John Wiley & Sons, 2024.

- European Commission - EU Annex 11 (GMP Guidelines) [https://health.ec.europa.eu/system/files/2016-11/annex11_01-2011_en_0.pdf]

- European Commission - EudraLex Volume 4 (GMP) [https://health.ec.europa.eu/latest-updates/eudralex-volume-4_en]